Tag: Moved Reality

-

May I introduce IRE?

What would it be like to explore the world as a small kid? Answering this question sounds like a perfect job for the Oculus Rift. For those who don’t know the Oculus Rift, it is a display that you wear on your head. Of course, there have already been similar…

-

IRE – Overview (Part 1 of 7)

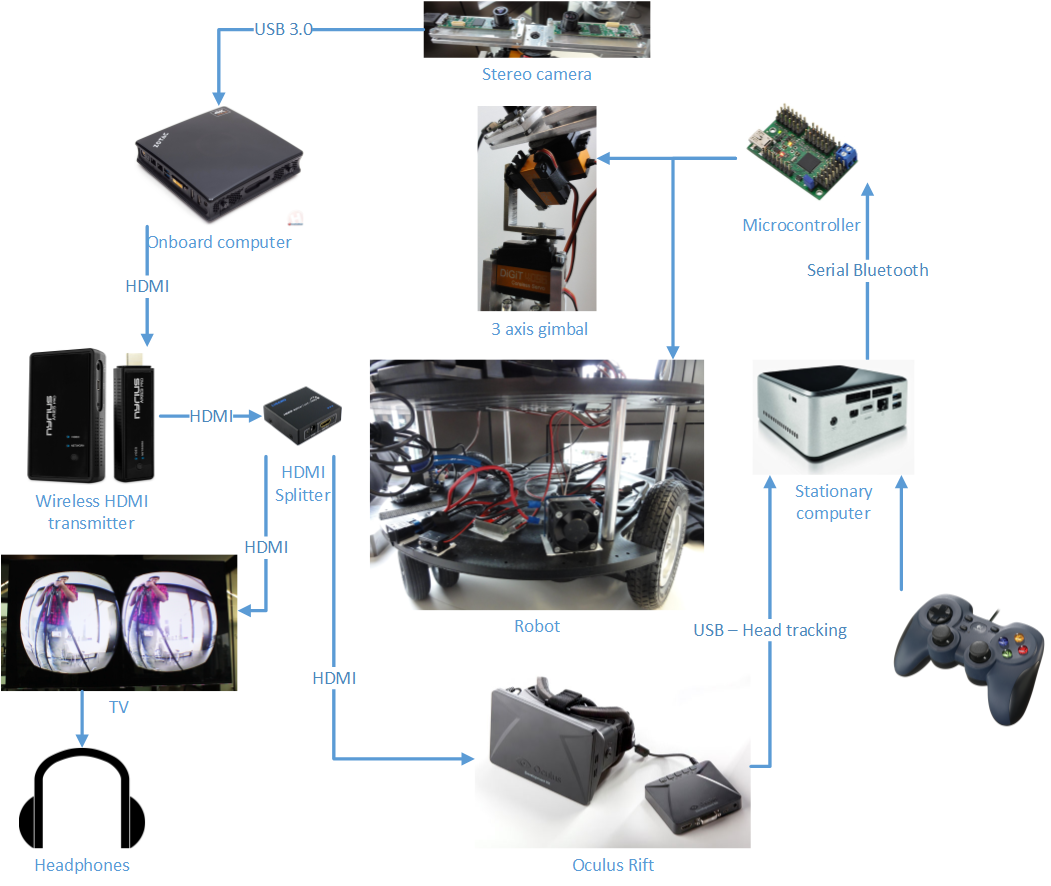

The post “May I introduce IRE?” has already explained the basics of what IRE is. This post is the start of a whole series of blog posts about my journey through this project. The following diagram gives a short overview of IRE’s system. The whole system is split in two…

-

IRE – Stereo camera (Part 2 of 7)

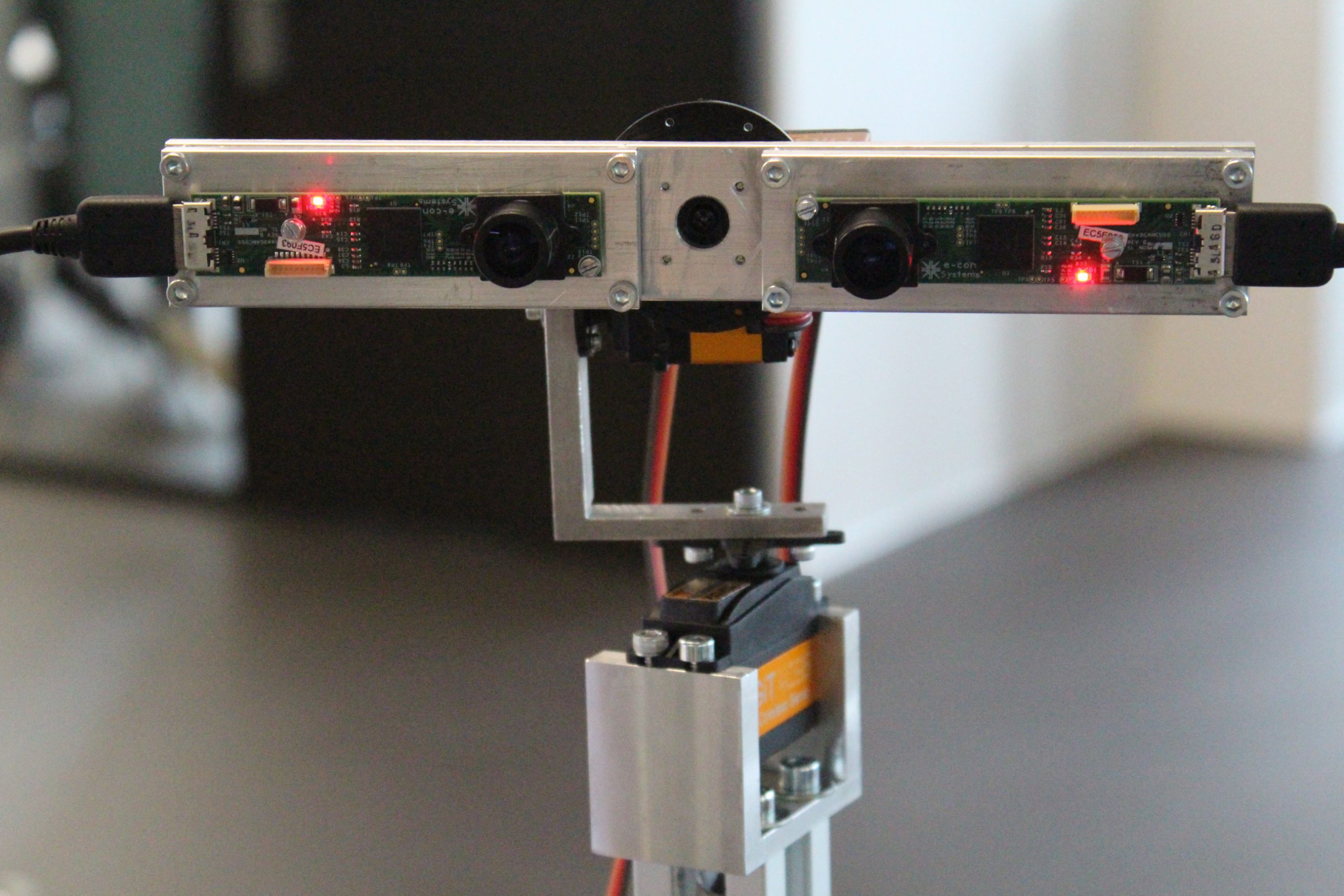

In order to match the Rift specifications as closely as possible, I had some very challenging requirements for the cameras. Most standard webcams, such as those made by Logitech, don’t reach 60 fps. The famous PlayStation 3 Eye camera has that high frame rate, but only at 640×480 pixels. Most onboard cameras would have 60 fps,…

-

IRE – Video & audio transmission (Part 3 of 7)

How can I wirelessly transmit 1080p@60fps with low latency? That was one of my biggest questions. Most FPV systems are analog, which results in a poor image. That’s why I wanted a digital solution. At first I thought of using Wi-Fi, but I soon realized that it would have too much latency. Some people…

-

IRE – Media processing (Part 4 of 7)

The special lenses of the Oculus Rift require the image to be distorted. This can be done with the official SDK. Its documentation is quite good, and luckily I also found the upcoming Oculus Rift in Action book with its nice source code examples. For my code I used the Hello Rift example as a base.…

-

IRE – Camera Gimbal (Part 5 of 7)

Of course I wanted to make use of the Rift’s amazing head tracking and sync the camera orientation with it. Using a few static fisheye cameras to compute the right image for the matching spot would have been neat. Such a solution would eliminate problems with quick head movement and latency. But omnistereo imaging is quite difficult for…

-

IRE – Control system (Part 6 of 7)

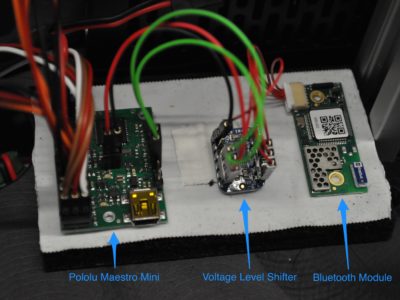

The last post covered how the video signal is transmitted. However, a communication channel back to the robot is also necessary. The signals for the gimbal and the robot are sent over this channel. The smartest solution would have been to transmit the USB signals of the Oculus Rift and the gamepad directly to the onboard…

-

IRE – Conclusion (Part 7 of 7)

Overall I was really impressed with how well the system worked, and was also rather proud of myself. As I built it for our open day, the system had to work all day long with many different people. In this regard it worked perfectly – the children especially went crazy for it; it was even…